LLM Context Window Comparison 2026: How to Get Cited by ChatGPT, Claude, Gemini & Perplexity

Complete technical comparison of AI platform context windows, features, citation strategies, and optimization tactics for law firm visibility

🎯 Key Takeaways

- Context window leaders in 2026: Google Gemini offers the largest readily available context window at 2 million tokens, while Claude Sonnet 4 and 4.5 provide 1 million tokens in beta for qualified organizations, and ChatGPT maintains 128,000 tokens across GPT-4o and GPT-5 models (IBM, November 2025).

- Practical context limitations: Most models experience performance degradation before reaching advertised limits—models claiming 200,000 tokens typically become unreliable around 130,000 tokens with sudden performance drops rather than gradual degradation (AIMultiple, 2025).

- Citation probability drivers: Pages with proper technical optimization (fast page speeds under 2 seconds, clean HTML, schema markup) combined with question-based content structure get cited 40% more often, while original research content sees 4.1× more citations (DreamHost, December 2025).

- Platform-specific citation patterns: Wikipedia serves as ChatGPT’s most cited source at 7.8% of total citations, while Reddit leads for both Google AI Overviews (2.2%) and Perplexity (6.6%), with Perplexity averaging 6.61 citations per response (Profound, August 2025).

- Pricing ranges for API access: As of January 2026, costs range from $0.10 per million input tokens (Gemini Flash-Lite) to $15 per million input tokens (Claude Opus), with output tokens typically costing 3-5× more than input tokens across all platforms (IntuitionLabs, December 2025).

LLM context windows determine how much information AI platforms can process simultaneously, ranging from 128,000 tokens (ChatGPT) to 2 million tokens (Gemini) as of January 2026. Getting cited requires optimizing across three layers: technical infrastructure (schema markup, page speed under 2 seconds), content structure (question-based headings with direct answers, self-contained paragraphs), and authority signals (fresh updates within 30 days, strong backlinks, clear authorship).

The landscape of legal marketing transformed fundamentally in 2025-2026 as ChatGPT alone surpassed 800 million weekly users and Google AI Overviews began appearing on 55%+ of searches. For law firms, understanding how these platforms process information, and how to appear in their responses, has become as critical as traditional search engine optimization. Context windows, the amount of information these AI systems can process at once, directly impact which sources get cited and how comprehensively they can evaluate your firm’s expertise.

Between January and October 2025, AI-referred sessions increased by more than 100% compared to the previous year, with these visitors arriving already convinced because AI recommended them. Yet many law firms ranking #1 on Google remain completely invisible when potential clients ask ChatGPT, Claude, or Perplexity about legal services. This guide provides the technical foundation you need to understand LLM capabilities and the strategic framework for achieving AI platform visibility through Generative Engine Optimization (GEO) services.

InterCore Technologies has specialized in AI-powered legal marketing since 2002, developing proprietary systems for optimizing law firm visibility across emerging platforms. Our developer-led approach focuses on the technical architecture behind AI citations: understanding not just what content AI platforms prefer, but how they process, evaluate, and cite information at a fundamental level. This comprehensive analysis examines context window capabilities across all major platforms, compares pricing and feature sets, and provides actionable strategies based on our implementation of GEO frameworks for law firms nationwide.

Understanding Context Windows: The Foundation

What Are Context Windows?

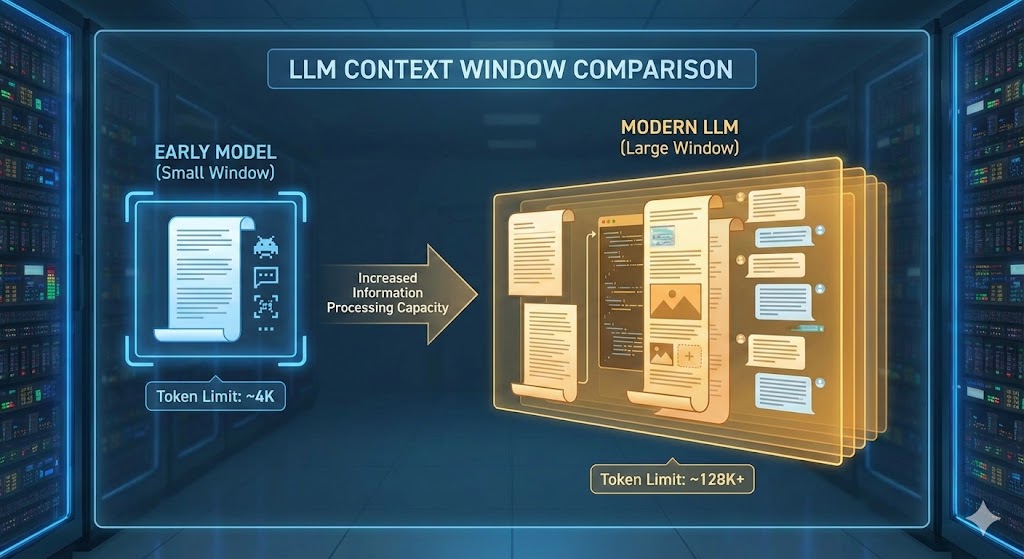

The context window (or “context length”) of a large language model represents the amount of text, measured in tokens, that the model can consider or “remember” at any one time. This functions as the AI’s working memory, determining how long a conversation it can maintain without forgetting earlier details and the maximum size of documents or code samples it can process simultaneously. According to IBM’s November 2025 technical documentation, a larger context window enables an AI model to process longer inputs and incorporate greater amounts of information into each output, directly translating to increased accuracy, fewer hallucinations, more coherent responses, and improved ability to analyze longer data sequences.

Tokens serve as the basic units AI models use to process text; think of tokens as pieces of words rather than complete words. A simple approximation suggests that one token equals roughly four characters in English, or about three-quarters of a word, meaning 128,000 tokens approximates 96,000 words or about 170 pages of text. However, token calculation varies by model and language, so a model’s own tokenizer or technical documentation gives more precise measurements for specific content types. When a prompt, conversation, document, or code base exceeds an AI model’s context window, it must be truncated or summarized for the model to proceed, potentially losing critical information that could influence the response quality.
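The rule of thumb above can be turned into a quick estimator. This is a rough sketch only: the four-characters-per-token and 0.75-words-per-token ratios are approximations (the latter matches the 96,000-word figure quoted for a 128,000-token window), and the function names are illustrative rather than part of any platform's API. A model's real tokenizer will give different counts.

```python
import math

CHARS_PER_TOKEN = 4      # rough English average (approximation)
WORDS_PER_TOKEN = 0.75   # assumption: about three-quarters of a word per token

def estimate_tokens(text: str) -> int:
    """Approximate the token count of a text from its character length."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)

def tokens_to_words(tokens: int) -> int:
    """Approximate how many English words a token budget represents."""
    return round(tokens * WORDS_PER_TOKEN)

def fits_in_window(text: str, window_tokens: int) -> bool:
    """Check whether the estimated token count fits inside a context window."""
    return estimate_tokens(text) <= window_tokens
```

For example, `tokens_to_words(128_000)` gives 96,000 under these assumptions. Legal documents dense with citations may tokenize less efficiently, so treat the result as a lower bound on token usage rather than a precise figure.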

⚠️ Limitations:

Token-to-page conversions represent approximations that vary based on content density, formatting, technical terminology, and language. Legal documents with extensive case citations or technical specifications may consume tokens at different rates than standard prose. Additionally, advertised context window sizes often exceed practical performance thresholds—testing by AIMultiple in 2025 revealed most models experience reliability degradation at 60-70% of their advertised context capacity.

Why Context Windows Matter for Law Firms

For legal marketing applications, context window capabilities directly impact how AI platforms evaluate and cite your firm’s expertise. When potential clients ask comprehensive questions about legal services—for example, “What personal injury attorney in Los Angeles has experience with complex medical malpractice cases involving surgical errors?”—AI platforms with larger context windows can simultaneously consider more of your website’s content, including case results, attorney biographies, practice area descriptions, and client testimonials. This comprehensive evaluation increases citation probability compared to platforms with smaller context windows that might only process your homepage and one practice area page.

The practical implications extend beyond simple content volume. According to research published by AllAboutAI in November 2025, models with larger context windows can maintain task continuity across multi-step reasoning, track past actions and goals without requiring reminders at every step, and process sequences of evolving instructions—capabilities particularly relevant for complex legal queries requiring synthesis of multiple information sources. For law firms implementing AI-driven client intake systems, larger context windows enable more sophisticated conversation handling where the AI assistant remembers detailed case information, jurisdiction-specific requirements, and client preferences throughout extended interactions spanning hundreds of messages.

However, context window size alone doesn’t determine citation success. A 2023 research paper noted by IBM found that LLMs don’t “robustly make use of information in long input contexts”—models perform best when relevant information appears toward the beginning or end of the input context, with performance degrading for information positioned in the middle of large context windows. This “lost in the middle” phenomenon has critical implications for content structure, suggesting that even with massive context windows, strategic content organization with clear section delineation and self-contained information units remains essential for AI citation optimization.
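One way to act on the "lost in the middle" finding when assembling long inputs is to put the strongest material at the edges. The sketch below assumes you already have passages ranked from most to least relevant (how you rank them is up to you); the function name is hypothetical, and this is one possible ordering heuristic, not a method prescribed by the cited research.

```python
def order_for_edges(ranked_chunks):
    """Arrange chunks so the most relevant sit at the start and end.

    ranked_chunks must be sorted most relevant first. Even-ranked items
    fill the front, odd-ranked items fill the back in reverse, which
    pushes the least relevant material toward the middle.
    """
    front, back = [], []
    for index, chunk in enumerate(ranked_chunks):
        if index % 2 == 0:
            front.append(chunk)
        else:
            back.append(chunk)
    return front + back[::-1]

# Usage: "A" is the most relevant passage, "E" the least.
ordered = order_for_edges(["A", "B", "C", "D", "E"])
# ordered == ["A", "C", "E", "D", "B"]
```

The same idea applies to page layout: lead with the direct answer, close with a concise summary, and keep supporting detail in clearly delineated sections between them.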

LLM Context Window Comparison 2026

The competitive landscape of AI context windows evolved dramatically between 2024 and 2026, with each platform pursuing different architectural strategies to balance context capacity against computational efficiency and response quality. Understanding these differences enables law firms to select appropriate platforms for specific optimization strategies and to structure content that performs well across the entire AI ecosystem rather than optimizing for a single platform.

ChatGPT Context Windows and Limitations

OpenAI’s ChatGPT maintains a 128,000-token context window across its GPT-4o and GPT-5 model families as of January 2026, representing approximately 170 pages of text or 96,000 words. While this context capacity ranks smaller than competitors, ChatGPT compensates through its unique memory feature that stores user preferences across sessions, creating personalized experiences where the AI recalls working styles, project details, and personal preferences from previous conversations. According to testing published by AllAboutAI in November 2025, ChatGPT maintains 58% performance at its full 128,000-token capacity but drops to 16.4% at theoretical 1-million-token extensions, indicating the 128K window represents a deliberately chosen optimization point rather than a technical limitation.

ChatGPT’s “Browse with Bing” feature performs real-time searches for current information, enabling citation of newly published content if it provides exceptional answers. This makes ChatGPT more responsive to fresh content than platforms relying primarily on training data. For law firms, this creates opportunities for rapid visibility on breaking legal developments, recent case results, or newly published thought leadership content. However, testing reported in Reddit discussions analyzed by AllAboutAI revealed that ChatGPT Plus uses retrieval-augmented generation (RAG) for uploaded files, breaking documents into chunks and retrieving only those most semantically related to queries rather than processing entire documents within the context window—a critical distinction when evaluating practical context utilization.

Claude Context Windows and Performance

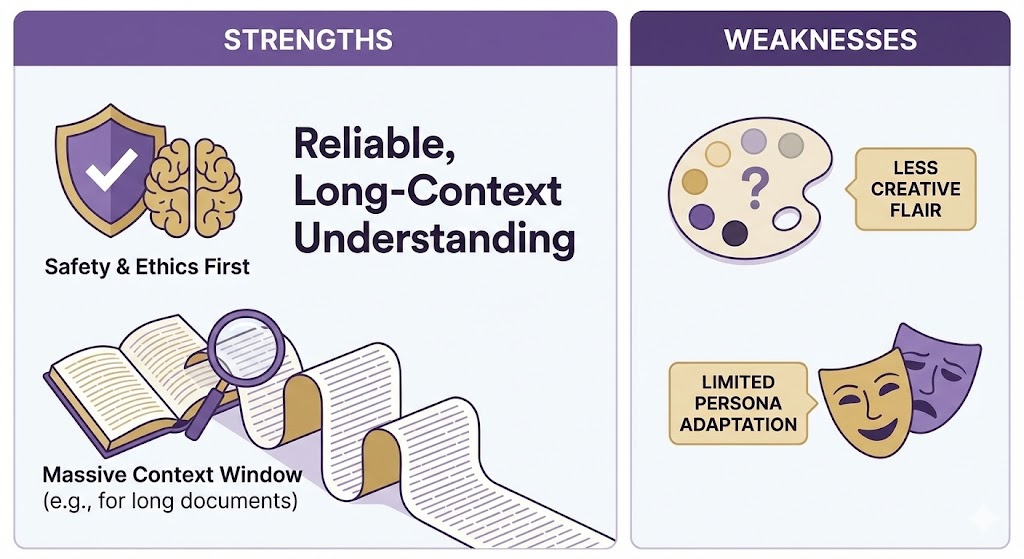

Anthropic’s Claude models offer 200,000-token standard context windows for Claude Opus 4, Sonnet 3.7, and Haiku 3.5, with Claude Sonnet 4 and 4.5 providing expanded 1-million-token capacity in beta for organizations in usage tier 4 or with custom rate limits. According to AIMultiple’s systematic testing in 2025, Claude Sonnet 4 demonstrates less than 5% accuracy degradation across its full 200,000-token context window, showing consistent performance throughout—a significant advantage over competitors that experience more pronounced degradation. Requests exceeding 200,000 tokens incur premium pricing at 2× input and 1.5× output rates, reflecting the increased computational requirements for attention mechanisms that scale quadratically with sequence length.
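The long-context surcharge is easy to underestimate, so a small calculation may help. The sketch below uses the Claude Sonnet 4 base rates from the pricing table later in this article ($3 input, $15 output per million tokens) and assumes the 2× and 1.5× multipliers apply to the entire request once input exceeds 200,000 tokens. That whole-request behavior is an assumption; confirm it against Anthropic's current pricing documentation before budgeting.

```python
def claude_request_cost(input_tokens, output_tokens,
                        input_rate=3.00, output_rate=15.00,
                        threshold=200_000):
    """Estimate request cost in USD; rates are per million tokens.

    Assumption: when input exceeds the threshold, premium rates
    (2x input, 1.5x output) apply to the whole request.
    """
    if input_tokens > threshold:
        input_rate *= 2.0
        output_rate *= 1.5
    return (input_tokens * input_rate + output_tokens * output_rate) / 1_000_000

standard = claude_request_cost(100_000, 2_000)   # about $0.33
extended = claude_request_cost(400_000, 2_000)   # about $2.45
```

Under these assumptions, quadrupling the input from 100,000 to 400,000 tokens raises the cost by roughly 7.4×, not 4×, which is worth weighing against splitting a document into smaller requests.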

Claude’s architecture emphasizes precision in long-form comprehension rather than maximizing throughput speed, making it especially effective for research, legal document analysis, and technical tasks requiring detailed attention to extensive source material. For Claude AI optimization strategies, content demonstrating clear reasoning and providing step-by-step explanations with logical flow performs particularly well. Claude’s growing user base among technical and research-focused audiences makes it increasingly important for law firms serving sophisticated clientele seeking detailed legal analysis rather than surface-level information.

Claude Context Window Tiers (January 2026)

| Model | Standard Context | Beta Extended | Best Use Cases |
|---|---|---|---|
| Claude Haiku 3.5 | 200K tokens | N/A | Cost-sensitive tasks, chatbots |
| Claude Sonnet 3.7 | 200K tokens | N/A | Balanced performance, writing |
| Claude Sonnet 4 / 4.5 | 200K tokens | 1M tokens | Enterprise document analysis |
| Claude Opus 4 | 200K tokens | N/A | Complex reasoning, legal research |

Source: AIMultiple, “Best LLMs for Extended Context Windows in 2026,” accessed January 2026

Google Gemini Context Windows and Capabilities

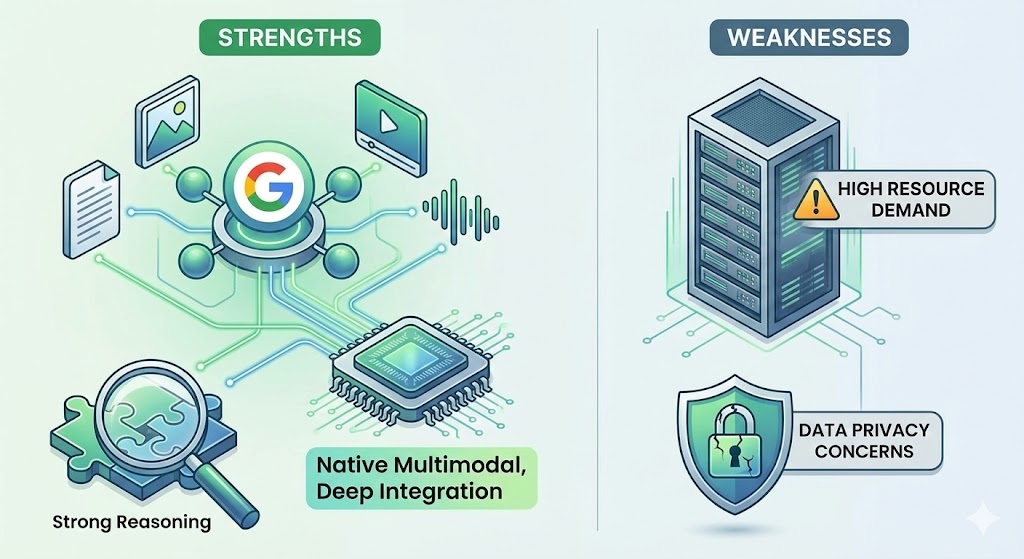

Google’s Gemini models deliver the largest readily available context windows in the 2026 market, with Gemini 1.5 Pro supporting up to 2 million tokens—equivalent to approximately 1,500,000 words or 2,000 pages of text. Other Gemini family models including Gemini 2.5 Flash and Gemini 3 Pro offer 1-million-token context windows with native multimodal processing across text, audio, images, and video. According to Google’s technical documentation, Gemini 1.5 Pro achieves near-perfect “needle” recall (>99.7%) up to 1 million tokens across all modalities, demonstrating robust information retrieval throughout its massive context capacity.

Gemini’s architectural approach differs fundamentally from ChatGPT and Claude through its use of a Mixture-of-Experts (MoE) transformer combined with joint vision-language embeddings. This structure employs sparse expert activation where only a fraction of transformer blocks fire per token, reducing compute cost while maintaining performance. Cross-token clustering groups semantically related tokens into compact latent spaces, while retrieval-augmented compression fetches supporting information from Google’s indexed knowledge graph in real-time. The combination of sparse routing and Google’s retrieval infrastructure enables book-scale document analysis while keeping inference costs manageable—a critical consideration for enterprise deployment at scale.

For law firms, Gemini’s deep integration with Google’s ecosystem provides unique advantages including seamless connection to Google Workspace applications, access to Google’s search index for grounding responses in current information, and preferential citation patterns within Google AI Overviews that appear on 55%+ of searches. However, the massive context window introduces processing time considerations—comprehensive document analysis may require longer response generation compared to platforms with smaller context windows optimized for speed. Strategic deployment involves leveraging Gemini’s capabilities for complex research tasks while reserving faster platforms for real-time client interactions.

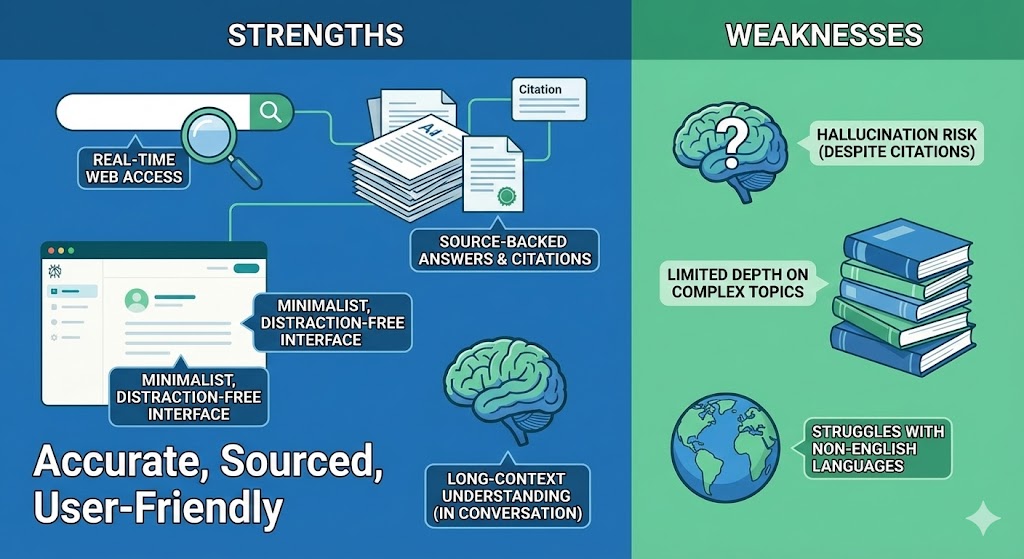

Perplexity AI Context Handling

Perplexity positions itself as an “answer engine” rather than traditional search engine, providing comprehensive responses with extensive citations that average 6.61 citations per response according to August 2025 data from Profound. Perplexity’s citation behavior differs notably from competitors through its emphasis on source diversity and transparent attribution. The platform uses PerplexityBot for web crawling and demonstrates particular affinity for citing community platforms—Reddit represents 6.6% of Perplexity’s top citations, significantly higher than other platforms. For Perplexity AI optimization, consistent content updates yield measurable results, with aggressive refresh schedules (2-3 day cycles) for priority content producing better citation rates than any other platform.

While Perplexity doesn’t publicly disclose specific context window parameters for its underlying models, the platform demonstrates effective handling of long-form queries requiring synthesis across multiple sources. Perplexity rewards content structured with clear E-E-A-T signals (Experience, Expertise, Authoritativeness, Trustworthiness), making detailed author bios with credentials, social profiles, and citations to authoritative external sources particularly effective—this optimization can increase citation probability by 110% according to research published by Siftly in January 2026. The platform’s growing adoption among professionals for research-quality answers makes it increasingly important for law firms seeking to reach sophisticated audiences conducting thorough due diligence before engaging legal counsel.

Microsoft Copilot Context Architecture

Microsoft Copilot leverages OpenAI’s GPT models with Microsoft-specific enhancements, operating with context windows aligned to GPT-4o’s 128,000-token capacity. Copilot’s integration with Microsoft’s ecosystem including Bing search, Microsoft 365 applications, and Azure cloud services creates unique optimization opportunities. Testing published by ADRA Tech Systems in January 2026 found that 87%+ of SearchGPT citations match Bing’s top results. Because Copilot also grounds its answers in Bing search, strong performance in Bing’s traditional search rankings likely increases the chance of Copilot citations as well. This creates a strategic advantage for law firms already investing in Bing Ads or Bing Webmaster Tools optimization.

Copilot’s citation patterns favor authoritative sources with strong technical infrastructure, clear schema markup, and demonstrated expertise signals. The platform’s enterprise focus means citations often draw from business-oriented sources including LinkedIn professional profiles, industry publications, and B2B review platforms. For law firms, establishing comprehensive LinkedIn presence for attorneys, publishing thought leadership content through Microsoft’s ecosystem, and ensuring proper schema markup across all digital properties creates multiple pathways to Copilot visibility.

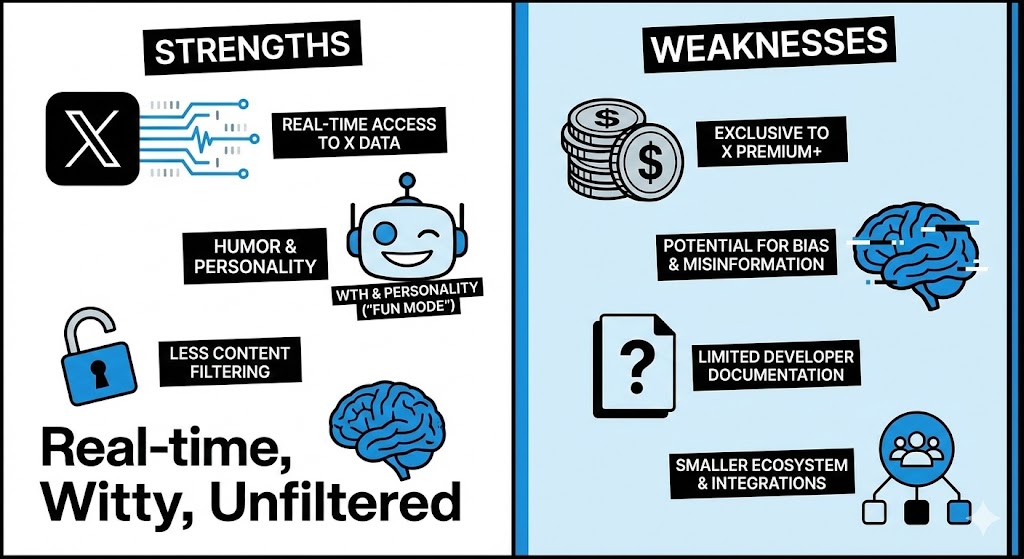

Grok Context Window Overview

xAI’s Grok models offer competitive context windows with Grok 4.1 supporting extended contexts, though specific token limits have not been publicly disclosed in the same detail as competitors. Grok’s integration with X (formerly Twitter) provides unique real-time information access and citation patterns that favor content with social engagement signals. The platform’s positioning as a “scientific” model with emphasis on current events and trending topics creates opportunities for law firms actively participating in legal discourse on social media platforms.

Grok’s pricing structure ($0.20 per million input tokens, $0.50 per million output tokens according to IntuitionLabs’ December 2025 analysis) positions it among the most affordable API options from major platforms, undercut in this comparison only by Gemini Flash-Lite ($0.10 input, $0.40 output), making it attractive for high-volume applications. However, the platform’s newer market entry means citation patterns and optimization best practices remain less established compared to ChatGPT, Claude, or Gemini. Law firms should monitor Grok’s evolution while focusing primary optimization efforts on platforms with proven citation patterns and larger user bases.

Feature Comparison Beyond Context Windows

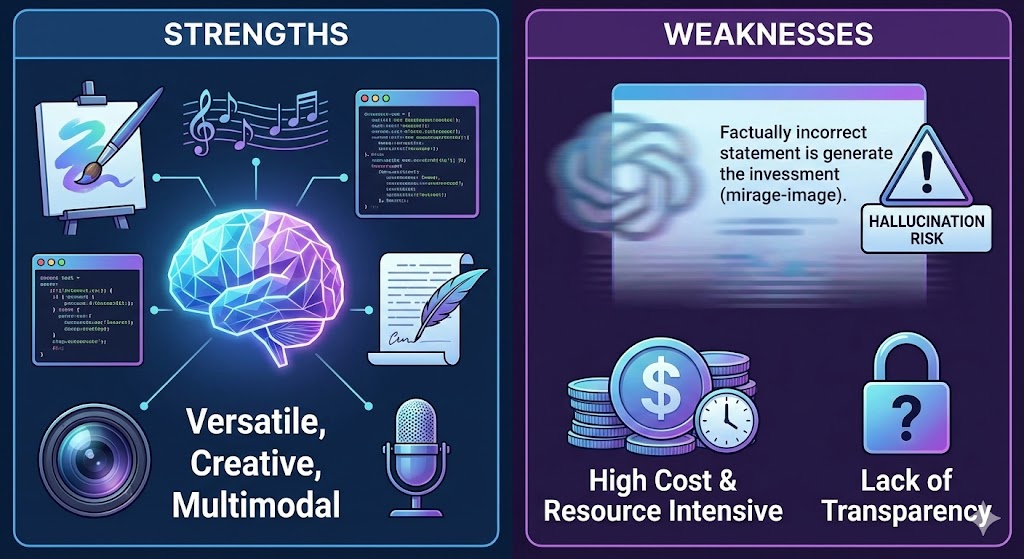

Multimodal Capabilities

Multimodal processing—the ability to understand and generate content across text, images, video, and audio—has become a critical differentiator among AI platforms. Google Gemini leads in native multimodal design, originally architected as a multimodal model enabling seamless extraction of insights from images, videos, and text simultaneously. According to testing published by WEZOM in December 2025, Gemini performs better than ChatGPT in multimodal tasks because multimodality was integrated from inception rather than added later. Gemini 1.5 Pro can process 1 hour of video, 11 hours of audio, codebases with over 30,000 lines of code, or over 700,000 words—capabilities particularly relevant for law firms dealing with video depositions, audio recordings, or extensive document discovery.

ChatGPT added multimodal capabilities through GPT-4o with image understanding, DALL-E integration for image generation, and code execution features. While not as seamlessly integrated as Gemini’s native multimodal architecture, ChatGPT’s multimodal features provide practical utility for creating visual content, analyzing images uploaded by users, and executing code in real-time. Claude has approached multimodality more conservatively, focusing on text processing excellence with selective image understanding capabilities in recent versions. For law firms, multimodal capabilities enable innovative client communication including visual case explanations, infographic generation for social media, and automated analysis of visual evidence in case files.

Output Token Limits

While context window size determines input capacity, output token limits constrain response length—a critical distinction often overlooked in platform comparisons. ChatGPT’s GPT-4 and GPT-4o models cap maximum output at 4,096 tokens despite their 128,000-token input context, while GPT-5 models extend output capacity to 128,000 tokens for certain configurations. This disparity between input and output capacity means ChatGPT can analyze extensive documents but may need to summarize findings rather than providing comprehensive responses matching the input detail level.

Claude models support up to 8,192 output tokens for Claude Opus and Sonnet versions as of January 2026, providing more extensive response capacity than GPT-4 but still constrained relative to input capacity. Gemini models offer up to 64,000 output tokens for Gemini 3 Pro, enabling generation of long-form content more comparable to input document length. These output limits have practical implications for legal applications—generating comprehensive legal memoranda, detailed case analysis, or extensive contract reviews may exceed single-response output limits, requiring iterative prompting or strategic content segmentation.
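When a deliverable is longer than a single response can hold, the segmentation described above is simple arithmetic. The sketch below is illustrative: the function names are hypothetical, the section sizes are made-up planning estimates, and the 8,192-token limit is the figure quoted above for Claude.

```python
import math

def responses_needed(target_tokens: int, output_limit: int) -> int:
    """Minimum number of responses needed to produce target_tokens of output."""
    if output_limit <= 0:
        raise ValueError("output_limit must be positive")
    return math.ceil(target_tokens / output_limit)

def plan_batches(section_tokens, output_limit):
    """Greedily group planned sections so each batch fits one response.

    A single section larger than the limit still gets its own batch and
    would need to be split further by hand.
    """
    batches, current, total = [], [], 0
    for size in section_tokens:
        if current and total + size > output_limit:
            batches.append(current)
            current, total = [], 0
        current.append(size)
        total += size
    if current:
        batches.append(current)
    return batches
```

A memorandum estimated at 20,000 output tokens would need at least `responses_needed(20_000, 8_192)`, or three, separate responses at that limit. Planning batches around section boundaries keeps each response self-contained.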

Pricing Structures

API pricing varies dramatically across platforms, with costs ranging from $0.10 per million input tokens (Gemini Flash-Lite) to $15 per million input tokens (Claude Opus) as of January 2026. According to comprehensive analysis by IntuitionLabs published December 2025, output tokens typically cost 3-5× more than input tokens across all platforms due to increased computational requirements for generation. For consumer subscription tiers, pricing has standardized around $20/month for mid-tier access (ChatGPT Plus, Claude Pro, Google AI Pro) providing flagship model access with usage limits, while premium tiers range from $100-300/month for extended capacity.

API Pricing Comparison (Per Million Tokens, January 2026)

| Platform / Model | Input Cost | Output Cost | Context Window |
|---|---|---|---|
| GPT-4o | $5.00 | $15.00 | 128K tokens |
| Claude Opus 4 | $15.00 | $75.00 | 200K tokens |
| Claude Sonnet 4 | $3.00 | $15.00 | 200K / 1M tokens |
| Gemini 3 Pro | $2.00 | $12.00 | 1M tokens |
| Gemini Flash-Lite | $0.10 | $0.40 | 1M tokens |
| Grok 4.1 | $0.20 | $0.50 | Extended |

Sources: IntuitionLabs API Pricing Comparison (December 2025); Google Cloud Vertex AI Pricing; Anthropic API Documentation

For law firms evaluating API integration, total cost depends heavily on use case token consumption patterns. A conversational chatbot responding to intake questions might consume 10,000-50,000 tokens per interaction including conversation history, while document analysis tasks could consume 100,000-500,000 tokens for comprehensive review. Strategic model selection—using cost-efficient models like Gemini Flash-Lite for routine tasks while reserving premium models for complex analysis—can reduce costs by 60-75% according to enterprise deployment data. Additionally, techniques like prompt caching (supported by Claude and Gemini) can reduce costs for repeated prompts by 75% by storing common prompt components and reusing them across multiple requests.
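To make the cost comparison concrete, the rates from the table above can be plugged into a small calculator. The dictionary keys are illustrative labels rather than official API model identifiers, the prices are the January 2026 figures quoted in this article and will change, and the workload numbers in the usage note are hypothetical.

```python
# USD per million tokens as (input, output), copied from the table above.
PRICES = {
    "gpt-4o": (5.00, 15.00),
    "claude-opus-4": (15.00, 75.00),
    "claude-sonnet-4": (3.00, 15.00),
    "gemini-3-pro": (2.00, 12.00),
    "gemini-flash-lite": (0.10, 0.40),
    "grok-4.1": (0.20, 0.50),
}

def cost(model, input_tokens, output_tokens):
    """Cost in USD of one request for the given model label."""
    in_rate, out_rate = PRICES[model]
    return (input_tokens * in_rate + output_tokens * out_rate) / 1_000_000

def monthly_cost(model, interactions, input_tokens, output_tokens):
    """Cost in USD of a month of identical interactions."""
    return interactions * cost(model, input_tokens, output_tokens)
```

As a hypothetical workload, 1,000 intake conversations per month at 30,000 input and 1,000 output tokens each would cost about $3.40 on Gemini Flash-Lite versus about $105 on Claude Sonnet 4 at these rates. This ignores caching discounts, long-context surcharges, and quality differences between models.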

API Access and Rate Limits

Beyond pricing, rate limits constrain API usage velocity—requests per minute, tokens per minute, and daily/monthly caps vary significantly across platforms and subscription tiers. ChatGPT’s API through OpenAI offers tiered rate limits based on usage history and payment tier, with newer accounts starting at lower limits that increase over time as consistent payment history establishes trust. Claude’s API provides similar graduated access with rate limits increasing at higher usage tiers (tier 4 unlocks the 1-million-token context window). Gemini’s API through Google Cloud offers the most generous free tier with up to 1,000 daily requests for experimentation, making it attractive for initial development before committing to paid plans.

For production deployment, law firms should plan for rate limit constraints affecting peak usage scenarios. Client intake systems experiencing surges after advertising campaigns or news events may hit rate limits without proper load balancing across multiple API keys or strategic queuing systems. Additionally, error handling becomes critical—requests failing with 400 or 500 errors typically aren’t charged for consumed tokens, but retry logic must account for rate limit responses (429 status codes) with appropriate exponential backoff to avoid cascading failures.
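The retry behavior described above can be sketched as follows. `RateLimitError` is a hypothetical exception standing in for whatever your HTTP client or SDK raises on a 429 response, and `request_fn` is any zero-argument callable that performs the API call. The delays and retry count are illustrative defaults, not provider recommendations.

```python
import random
import time

class RateLimitError(Exception):
    """Hypothetical stand-in for an HTTP 429 (rate limit) response."""

def call_with_backoff(request_fn, max_retries=5, base_delay=1.0,
                      max_delay=60.0, sleep=time.sleep):
    """Call request_fn, retrying on RateLimitError with exponential backoff.

    The wait doubles after each failed attempt (1s, 2s, 4s, ...), is capped
    at max_delay, and gets up to 10% random jitter so that many clients do
    not retry in lockstep. The final failure is re-raised to the caller.
    """
    for attempt in range(max_retries + 1):
        try:
            return request_fn()
        except RateLimitError:
            if attempt == max_retries:
                raise
            delay = min(max_delay, base_delay * (2 ** attempt))
            sleep(delay + random.uniform(0, delay * 0.1))
```

The `sleep` parameter exists so the function can be tested without real waiting. Many APIs also return a `Retry-After` header on 429 responses; where available, honoring it is usually preferable to a fixed schedule.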

How to Get Cited by AI Platforms

The Shift from SEO to GEO

Generative Engine Optimization (GEO) represents a fundamental evolution from traditional Search Engine Optimization (SEO), shifting focus from ranking positions to citation probability. While SEO concentrates on securing high rankings in search engine results pages so users can click through to websites, GEO focuses on getting content cited and quoted directly in AI-generated responses. According to research published by ADRA Tech Systems in January 2026, this distinction matters because AI platforms synthesize information from multiple sources to create comprehensive answers rather than presenting ranked lists of links—being cited means your content is deemed trustworthy enough to stand beside the answer itself, influencing brand recall and downstream conversions even when no direct click occurs.

However, GEO does not replace SEO but complements it. Analysis by TheMarketers in November 2025 demonstrates that Google sends 345 times more traffic than ChatGPT, Gemini, and Perplexity combined, while 76.1% of AI Overview citations also rank in Google’s top 10 traditional search results. Strong SEO foundations directly feed GEO visibility because technical optimizations—site speed, schema markup, quality content—benefit both traditional and AI-powered discovery. The recommended distribution for law firms in 2026 involves balanced investment: 50% effort on traditional SEO for immediate traffic generation and 50% effort on GEO for future-proofing as AI adoption accelerates. This strategic balance ensures firms capture today’s clients while positioning for tomorrow’s AI-driven discovery patterns.

Technical Requirements for AI Citations

Technical infrastructure forms the foundation for AI citation success, with three critical elements consistently appearing across optimization research. First, page speed under 2 seconds represents a hard threshold—DreamHost’s December 2025 analysis found sites exceeding 2-second load times experience 40% lower citation rates regardless of content quality. Second, clean HTML structure with proper semantic markup enables AI platforms to parse content efficiently, with schema markup acting as detailed labels telling AI exactly what content represents. Third, crawler accessibility ensures AI platforms can actually reach content—blocking GPTBot, ClaudeBot, PerplexityBot, or Google-Extended in robots.txt eliminates citation opportunities entirely.

Schema markup implementation should encompass comprehensive entity establishment across relevant types: Attorney for individual lawyers with credentials and practice areas, LegalService for service offerings with areaServed properties, Organization for firm-level information with sameAs social profile links, and FAQPage for structured question-answer content. According to Siftly’s January 2026 research, FAQ sections with proper schema markup get cited 200% more often than regular content, while structured data implementation combined with clear heading hierarchy increases citation probability by 195%. For law firms, implementing the comprehensive schema framework outlined in InterCore’s 200-point SEO technical audit checklist provides the technical foundation upon which content optimization builds.
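As an illustration of the FAQPage type mentioned above, the following sketch builds JSON-LD from question-and-answer pairs. The `@type` values and property names (`mainEntity`, `acceptedAnswer`, `text`) come from the schema.org vocabulary; the helper function names and the sample question are illustrative assumptions, and the output should be checked with a structured-data validator before publishing.

```python
import json

def faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }

def as_script_tag(data):
    """Wrap JSON-LD in the script tag used to embed it in an HTML page."""
    return '<script type="application/ld+json">\n' + json.dumps(data, indent=2) + "\n</script>"

# Hypothetical example content.
markup = as_script_tag(faq_jsonld([
    ("How long do I have to file a personal injury claim in California?",
     "Generally two years from the date of injury, with exceptions."),
]))
```

The same pattern extends to the Attorney, LegalService, and Organization types by swapping the `@type` and supplying the properties each type defines.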

✅ Technical Citation Requirements Checklist

- Page speed: Load time under 2 seconds on mobile and desktop

- Mobile responsiveness: Fully functional mobile experience with readable text

- HTTPS security: Valid SSL certificate with no mixed content warnings

- Schema markup: Attorney, LegalService, Organization, FAQPage types implemented

- Structured headings: Proper H1-H4 hierarchy with descriptive, question-based headings

- Crawler access: Allow GPTBot, ClaudeBot, PerplexityBot, Google-Extended in robots.txt

- XML sitemap: Updated sitemap submitted to search engines and accessible to crawlers

- Clean URL structure: Descriptive URLs without excessive parameters or session IDs
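The crawler-access item in the checklist above can be spot-checked with Python's standard-library `urllib.robotparser`. The sketch takes the robots.txt content as a string so it runs offline; the user-agent names are the ones listed in this article, and the default URL is a placeholder. Note that the standard-library parser approximates robots.txt matching and may not mirror exactly how each crawler interprets the file.

```python
from urllib.robotparser import RobotFileParser

AI_CRAWLERS = ["GPTBot", "ClaudeBot", "PerplexityBot", "Google-Extended"]

def blocked_crawlers(robots_txt: str, url: str = "https://example.com/"):
    """Return the AI crawlers that the given robots.txt blocks from url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [bot for bot in AI_CRAWLERS if not parser.can_fetch(bot, url)]

# Example: a robots.txt that blocks only GPTBot.
sample = """User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""
blocked = blocked_crawlers(sample)
```

Running this against a firm's live robots.txt (fetched separately) gives a quick list of AI crawlers that are currently shut out.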

Content Structure for AI Extraction

Generative engines extract answers rather than ranking pages, requiring fundamentally different content structure than traditional SEO. The “answer capsule” technique has emerged as the most effective AI optimization strategy according to ALM Corp’s December 2025 comprehensive guide: place a comprehensive, standalone answer immediately after each primary heading, before any introductory context or background information. For example, an article addressing “What is the statute of limitations in California personal injury cases?” should immediately provide: “California’s statute of limitations for personal injury cases is generally two years from the date of injury under California Code of Civil Procedure § 335.1, with specific exceptions for discovery of harm, minors, and government entities.”

Self-contained content units (SCUs) represent another critical structural requirement. AI platforms may extract individual paragraphs or sections without surrounding context, meaning each paragraph must be independently comprehensible. Research from Geneo published in 2025 demonstrates the importance of avoiding phrases like “as mentioned above” because AI might only extract that specific fragment, rendering the content meaningless without prior context. For law firms, this means structuring practice area pages with each section independently explaining services, qualifications, process steps, and outcomes rather than assuming readers arrived through the homepage and read sequentially.
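A simple audit can flag paragraphs that lean on surrounding context. The sketch below scans for a short, illustrative list of back-referencing phrases; the list is an assumption and far from exhaustive, and a phrase match is only a prompt for human review, not proof that a paragraph fails to stand alone.

```python
import re

# Illustrative phrases that signal dependence on earlier text (not exhaustive).
CONTEXT_PHRASES = [
    "as mentioned above",
    "as noted earlier",
    "as discussed previously",
    "see above",
    "the aforementioned",
]

def context_dependent_paragraphs(text):
    """Return (paragraph_index, matched_phrases) for paragraphs needing review.

    Paragraphs are separated by blank lines; indexes start at 0.
    """
    paragraphs = [p.strip() for p in re.split(r"\n\s*\n", text) if p.strip()]
    results = []
    for index, paragraph in enumerate(paragraphs):
        lowered = paragraph.lower()
        hits = [phrase for phrase in CONTEXT_PHRASES if phrase in lowered]
        if hits:
            results.append((index, hits))
    return results
```

Flagged paragraphs can then be rewritten to restate the missing fact directly, for example by replacing “as mentioned above” with the specific fee structure or deadline being referenced.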

Question-based headings directly aligned with user queries significantly improve citation rates. Testing by ChatGPT optimization specialists shows that headings phrased as questions users actually ask, such as “How much does a divorce attorney cost in Los Angeles?”, perform better than generic headings like “Pricing Information.” Additionally, including specific statistics with source attributions, comparison tables for evaluating options, and step-by-step process explanations creates multiple “grab points” where AI platforms can extract valuable, citable information. Content formats that consistently achieve higher citation rates include structured lists (numbered steps, bulleted features), comparison tables with clear categories, FAQ sections with 5-10 questions, and case study formats demonstrating real outcomes.

Authority Signals That Drive Citations

AI platforms favor credible sources, making authority signal optimization essential for citation success. Detailed author bios with credentials, professional licenses, years of experience, and social profile links increase citation probability by 110% according to Siftly’s research. For law firms, this means comprehensive attorney biography pages with State Bar numbers, law school education, practice area certifications, published articles, speaking engagements, and professional association memberships. Additionally, citing authoritative external sources within content—referencing court decisions, statutory law, bar association guidelines, academic research—enhances perceived trustworthiness even when those citations aren’t extracted into AI responses.

Content freshness represents another critical authority signal, particularly for Perplexity which rewards aggressive update schedules more than any other platform. According to DreamHost’s December 2025 analysis, content updated within the past 30 days receives preferential citation treatment across all platforms, with freshness signals including published dates displayed prominently, “last updated” timestamps showing recent maintenance, and regular additions of new case results or legal developments. For law firms, implementing systematic content refresh cycles—updating practice area pages quarterly with recent case outcomes, refreshing FAQ sections monthly with new questions from client interactions, and publishing weekly blog posts on current legal topics—maintains the freshness signals AI platforms prioritize.
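A refresh cycle is easier to keep up when stale pages are surfaced automatically. The sketch below assumes a firm can export each page's “last updated” date (for example from its CMS); the URLs, dates, and function name are hypothetical, and the 30-day threshold is taken from the analysis cited above.

```python
from datetime import date

FRESHNESS_WINDOW_DAYS = 30  # the 30-day window cited above


def stale_pages(last_updated: dict[str, date], today: date) -> list[str]:
    """Return URLs whose last update is older than the freshness window."""
    return sorted(
        url
        for url, updated in last_updated.items()
        if (today - updated).days > FRESHNESS_WINDOW_DAYS
    )


# Hypothetical export of page paths and their last-updated dates.
pages = {
    "/practice-areas/family-law": date(2026, 1, 20),
    "/faq": date(2025, 11, 1),
}
print(stale_pages(pages, today=date(2026, 1, 27)))  # ['/faq']
```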

External authority signals including backlinks from reputable legal publications, mentions on industry websites, and strong social media presence contribute to overall domain authority evaluated by AI platforms. Analysis published by GreenBananaSEO in November 2025 found approximately 90% of ChatGPT citations come from sources outside the top 20 traditional search results, highlighting that depth and structure matter more than ranking position alone. However, strong backlink profiles from .edu and .gov domains, citations from legal news publications, and active participation on platforms AI systems frequently cite (Reddit, LinkedIn, YouTube) create multiple pathways to citation consideration beyond on-page optimization.

Platform-Specific Optimization Strategies

While foundational optimization principles apply across platforms, each AI system demonstrates unique preferences requiring platform-specific strategies. For ChatGPT optimization, focus on freshness through regular content updates, strong Bing search rankings (87%+ citation alignment), and comprehensive content addressing questions thoroughly in single pages rather than fragmenting information across multiple pages. ChatGPT’s Browse with Bing feature means newly published content can achieve citations rapidly if it provides exceptional answers to current queries—an advantage for law firms publishing timely analysis of new legislation, recent court decisions, or breaking legal developments.

Perplexity optimization requires emphasis on source diversity—publishing across multiple platforms (your website, Medium, LinkedIn articles, YouTube videos) increases citation opportunities because Perplexity draws from broader source sets than competitors. Building genuine presence on platforms Perplexity frequently cites (Reddit for community discussions, YouTube for video content, LinkedIn for professional thought leadership) creates multiple citation pathways. Additionally, Perplexity’s 2-3 day content refresh responsiveness means maintaining an aggressive update schedule for priority pages yields measurable improvements in citation rates.

For Google Gemini and AI Overviews, leveraging Google’s ecosystem provides strategic advantages. Ensuring Google Business Profile completeness with regular posts, reviews, and Q&A responses, optimizing for Google’s existing knowledge graph through consistent NAP (Name, Address, Phone) information across all citations, and maintaining active YouTube presence with legal education content all contribute to Gemini citation consideration. Gemini’s preference for Reddit and Medium content creates opportunities for law firms willing to engage authentically on these platforms—providing valuable legal information, answering community questions, and building reputation through helpfulness rather than self-promotion.

Claude optimization benefits from content demonstrating clear reasoning and step-by-step explanations of not just what to do but why and how. The platform responds particularly well to logical flow and detailed methodology sections, making it ideal for complex legal process explanations, comprehensive case evaluation frameworks, and thorough analysis of legal strategy considerations. For law firms serving sophisticated clientele seeking detailed understanding rather than surface-level information, investing in Claude-optimized long-form content addressing complex topics comprehensively creates differentiation in a market often dominated by simplified explanations.

Measurement Framework for AI Citation Success

Measuring AI citation success requires dual approaches tracking both referral traffic and visibility without clicks. For measurable referrals, implement a custom channel group in Google Analytics 4 that buckets referrals from chatgpt.com, perplexity.ai, and other AI platforms into a dedicated channel ordered above the standard “Referral” channel, so the AI rule matches first. This enables tracking of sessions, engaged sessions, and conversions specifically from AI sources. According to Geneo’s best practices guide, comparing AI/LLM traffic against organic search establishes baseline expectations and demonstrates the value proposition of AI visibility investments to stakeholders.
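GA4 channel groups are configured in the Admin interface, but the same bucketing logic can be mirrored in code, for instance when analyzing exported referral data or server logs. The sketch below is an assumption-laden illustration: only chatgpt.com and perplexity.ai come from the text above, the other hostnames are assumed examples, and the channel labels are hypothetical.

```python
import re
from urllib.parse import urlparse

# chatgpt.com and perplexity.ai are named above; the remaining hosts are
# assumptions and should be checked against the firm's own referral data.
AI_REFERRER_PATTERN = re.compile(
    r"(^|\.)(chatgpt\.com|perplexity\.ai|claude\.ai"
    r"|gemini\.google\.com|copilot\.microsoft\.com)$"
)


def classify_referrer(referrer_url: str) -> str:
    """Bucket a referrer URL into 'AI/LLM', 'Referral', or 'Direct'."""
    host = (urlparse(referrer_url).hostname or "").lower()
    if not host:
        return "Direct"
    # The AI rule is checked first, mirroring a channel placed above "Referral".
    if AI_REFERRER_PATTERN.search(host):
        return "AI/LLM"
    return "Referral"


for url in [
    "https://chatgpt.com/",
    "https://www.perplexity.ai/search?q=probate",
    "https://example.com/blog",
]:
    print(url, "->", classify_referrer(url))
```

Anchoring the pattern on a leading dot or the start of the hostname keeps lookalike domains (for example a host that merely ends in the same letters) out of the AI bucket.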

For visibility without clicks—citations that influence brand awareness without generating immediate traffic—weekly manual checks across target queries provide qualitative data. Systematically testing 20-50 priority queries across ChatGPT, Perplexity, Claude, and Google AI Overviews, logging whether your firm appears cited, which specific URLs get referenced, and which competitor firms appear alongside yours creates longitudinal data revealing citation pattern trends. Capturing screenshots and archiving responses enables historical comparison showing progress over time as optimization efforts compound.

Example Measurement Framework

- Baseline documentation: Before implementation, test 20-50 relevant queries across ChatGPT, Perplexity, Google AI Overviews, Claude, and Copilot. Document current citation rate, competitor presence, and specific queries where your firm appears versus doesn’t appear.

- Query set definition: Define target queries based on practice areas and locations using actual client intake question patterns. Include commercial intent queries (“hire [practice area] attorney in [city]”), informational queries (“how does [legal process] work in [state]”), and comparison queries (“best [practice area] lawyers in [region]”).

- Measurement cadence: Weekly spot checks on 5-10 priority queries for rapid feedback, monthly comprehensive testing of the full query set for trend analysis, and quarterly competitive analysis documenting changes in competitor citation patterns.

- Reporting metrics: Track mention rate (percentage of queries where your firm appears), citation rate (percentage where you’re actively cited in the response body), accuracy rate (percentage where information about your firm is correct), and competitor comparison (your mentions versus top 3 competitors in your market).

- Traffic validation: Monitor GA4 AI/LLM traffic channel for referral sessions, compare conversion rates between AI-referred traffic versus organic search to quantify quality differences, and attribute revenue to AI sources using standard conversion tracking methodologies.
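The reporting metrics in this framework can be computed from a simple log of manual checks. The following is a minimal sketch, assuming each check is recorded as one row per query and platform; the record fields and sample rows are hypothetical. The framework does not specify a denominator for accuracy rate, so this sketch assumes it is measured only over responses that mention the firm.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class QueryResult:
    query: str
    platform: str
    mentioned: bool            # firm appears anywhere in the response
    cited: bool                # firm is actively cited in the response body
    accurate: Optional[bool]   # None when the firm is not mentioned


def summarize(results: list[QueryResult]) -> dict[str, float]:
    """Compute mention, citation, and accuracy rates as fractions of 1."""
    total = len(results)
    if total == 0:
        return {"mention_rate": 0.0, "citation_rate": 0.0, "accuracy_rate": 0.0}
    mentioned = [r for r in results if r.mentioned]
    # Assumption: accuracy is judged only where the firm is mentioned.
    accuracy = (
        sum(1 for r in mentioned if r.accurate) / len(mentioned) if mentioned else 0.0
    )
    return {
        "mention_rate": len(mentioned) / total,
        "citation_rate": sum(1 for r in results if r.cited) / total,
        "accuracy_rate": accuracy,
    }


# Hypothetical log entries from one weekly spot check.
log = [
    QueryResult("hire family law attorney in [city]", "ChatGPT", True, True, True),
    QueryResult("hire family law attorney in [city]", "Perplexity", True, True, False),
    QueryResult("how does probate work in [state]", "Claude", True, False, True),
    QueryResult("best estate lawyers in [region]", "Copilot", False, False, None),
]
print(summarize(log))
```

Running the same summary on each week's log, and on a competitor's entries, gives the trend and comparison figures the framework calls for.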

For law firms seeking more sophisticated measurement without manual testing overhead, specialized platforms like Siftly, Otterly AI, and Profound provide automated tracking across multiple AI platforms with regular reporting. These solutions track query sets automatically, provide competitive intelligence comparing your citations against competitors, and integrate with existing analytics platforms to correlate citation visibility with traffic and conversion outcomes. InterCore’s AI marketing automation services include comprehensive measurement frameworks combining automated tracking with strategic analysis of citation patterns to optimize ongoing GEO efforts.

Frequently Asked Questions

Which AI platform has the largest context window in 2026?

Google Gemini offers the largest readily available context window at 2 million tokens for Gemini 1.5 Pro as of January 2026. Other Gemini models including 2.5 Flash and 3 Pro provide 1-million-token context windows. Claude Sonnet 4 and 4.5 offer 1-million-token capacity in beta for qualified organizations (usage tier 4 or custom rate limits), while standard Claude models provide 200,000 tokens. ChatGPT maintains 128,000-token context windows across GPT-4o and GPT-5 families, the smallest among major platforms but compensated by cross-session memory features and real-time web search integration.

However, advertised context window size doesn’t always correlate with practical performance. AIMultiple’s systematic testing revealed that most models become unreliable around 60-70% of advertised capacity, with Claude Sonnet 4 demonstrating exceptional consistency with less than 5% accuracy degradation across its full 200,000-token window. For law firms, selecting platforms based on effective context utilization rather than maximum advertised capacity often yields better real-world performance.
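As a rough planning aid, the 60-70% reliability band can be applied to any advertised window. This is back-of-the-envelope arithmetic on the figures above, not a benchmark; the function name is hypothetical and real reliability varies by model and task.

```python
def effective_context_range(advertised_tokens: int) -> tuple[int, int]:
    """Apply the 60-70% reliability band to an advertised context window."""
    # Integer arithmetic avoids floating-point rounding surprises.
    return advertised_tokens * 60 // 100, advertised_tokens * 70 // 100


# Advertised context windows taken from the comparison above.
for name, advertised in [
    ("128,000-token window", 128_000),
    ("200,000-token window", 200_000),
    ("2,000,000-token window", 2_000_000),
]:
    low, high = effective_context_range(advertised)
    print(f"{name}: plan for roughly {low:,} to {high:,} reliable tokens")
```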

Does having strong Google rankings guarantee AI citation visibility?

Strong Google rankings significantly increase AI citation probability but don’t guarantee visibility. Research published by ADRA Tech Systems in January 2026 found that 76.1% of Google AI Overview citations also rank in Google’s top 10 traditional search results, demonstrating strong correlation between SEO performance and AI visibility. However, GreenBananaSEO’s November 2025 analysis revealed approximately 90% of ChatGPT citations come from sources outside the top 20 search results, indicating AI platforms evaluate content quality and structure beyond ranking position alone.

The relationship works both ways: technical foundations benefiting SEO (page speed, schema markup, quality content, authoritative backlinks) also improve AI citation probability, creating synergistic effects where SEO and GEO investments compound. Law firms should maintain balanced 50/50 investment allocation between traditional SEO for immediate traffic generation and GEO for future-proofing as AI adoption accelerates, rather than viewing these as competing priorities requiring resource trade-offs.

How long does it take to see results from GEO optimization efforts?

GEO optimization timelines vary based on implementation complexity and starting point. Quick wins including schema markup implementation, content restructuring with answer capsules, and robots.txt adjustments can show initial results within 2-3 weeks as AI crawlers re-index updated content. According to TheMarketers’ November 2025 analysis, technical infrastructure improvements and header reformatting typically demonstrate measurable citation increases within 4-6 weeks of implementation.

Building comprehensive topical authority through content clusters typically requires 3-6 months of consistent publication and optimization. Establishing consistent branding across the external platforms that AI systems frequently cite (Reddit, LinkedIn, YouTube) requires ongoing effort but can start driving citations within 2-3 months of active participation. For law firms starting from minimal AI visibility, expect 90-120 days for meaningful citation rates on priority queries, with continued improvement over 6-12 months as the content repository expands and domain authority compounds across multiple AI platforms.

What’s the most cost-effective AI platform for law firm API integration?

Gemini Flash-Lite offers the lowest entry pricing at $0.10 per million input tokens and $0.40 per million output tokens as of January 2026, making it the most cost-effective option for high-volume applications where flagship model quality isn’t required. Grok 4.1 provides comparably low pricing at $0.20/$0.50 per million tokens but with a less established performance track record. For applications requiring balance between cost and capability, Gemini 2.5 Pro at $1.25/$10 per million tokens delivers strong performance at approximately 60% lower cost than ChatGPT’s GPT-4o ($5/$15) according to IntuitionLabs’ comprehensive pricing analysis; on the listed rates, that is 75% less for input tokens and about 33% less for output tokens.

However, total cost optimization requires strategic model selection beyond base pricing. Using cost-efficient models for 70% of routine tasks (client intake questions, basic case evaluation) while reserving premium models (Claude Opus, GPT-4o) for 30% of complex analysis tasks typically yields 60-75% cost reduction versus all-in flagship model deployment. Additionally, implementing prompt caching (supported by Claude and Gemini) reduces costs by 75% for repeated prompt components, while batch processing for non-urgent requests often qualifies for 50% pricing discounts across platforms.
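The 70/30 routing claim can be sanity-checked with the list prices quoted in this answer. The sketch below is a simplified model under stated assumptions: every task consumes the same number of input and output tokens regardless of tier, the routine tier is Gemini Flash-Lite, the premium tier is GPT-4o, and caching and batch discounts are ignored. The model keys and function names are hypothetical labels, not API identifiers.

```python
# USD per million tokens (input, output), as quoted above for January 2026.
PRICES = {
    "gemini-flash-lite": (0.10, 0.40),
    "gpt-4o": (5.00, 15.00),
}


def task_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost in USD of one task on the given model."""
    input_price, output_price = PRICES[model]
    return (input_tokens * input_price + output_tokens * output_price) / 1_000_000


def blended_savings(cheap: str, premium: str, cheap_share: float,
                    input_tokens: int, output_tokens: int) -> float:
    """Fractional savings of a mixed deployment versus all-premium."""
    all_premium = task_cost(premium, input_tokens, output_tokens)
    mixed = (
        cheap_share * task_cost(cheap, input_tokens, output_tokens)
        + (1 - cheap_share) * all_premium
    )
    return 1 - mixed / all_premium


# Assumed workload: 2,000 input and 500 output tokens per task.
savings = blended_savings("gemini-flash-lite", "gpt-4o", 0.70, 2_000, 500)
print(f"Estimated savings versus all-premium: {savings:.0%}")
```

Under these assumptions the estimate lands inside the 60-75% range cited above. The result is driven mostly by the share of tasks routed to the cheaper tier, so the routing split matters more than the exact token counts.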

Should law firms optimize for all AI platforms or focus on specific ones?

Foundational optimization—technical infrastructure, content structure, authority signals—benefits all platforms simultaneously, making comprehensive multi-platform optimization more efficient than commonly assumed. According to research by TheMarketers published November 2025, approximately 80% of GEO best practices apply universally across ChatGPT, Claude, Gemini, Perplexity, and Copilot. Implementing core requirements (page speed optimization, schema markup, question-based content structure, self-contained paragraphs, clear authorship) creates baseline eligibility for citations across the entire AI ecosystem without platform-specific customization.

Platform-specific optimization becomes valuable after establishing foundational competence. For B2B-focused law firms serving corporate clients (employment law, corporate transactional work), prioritizing Perplexity and Claude optimization makes strategic sense given their professional user bases. Consumer-focused practices (personal injury, family law, estate planning) should prioritize ChatGPT and Google AI Overviews given their mass-market adoption. However, given strong user growth across all platforms and citation pattern diversity, maintaining presence across the full ecosystem prevents over-dependence on any single platform’s algorithm changes or market position shifts.

How do output token limits affect practical AI applications for law firms?

Output token limits constrain response length independently from input context windows, creating practical limitations for applications requiring extensive outputs. ChatGPT’s GPT-4 and GPT-4o models cap maximum output at 4,096 tokens (approximately 3,000 words) despite 128,000-token input capacity, meaning comprehensive document analysis might require summarization rather than detailed point-by-point responses. Claude models support up to 8,192 output tokens (approximately 6,000 words), while Gemini 3 Pro offers 64,000 output tokens enabling generation of extensive content more comparable to input document length.

For law firms, these limits impact use cases differently. Client intake chatbots typically function well within output constraints since individual responses rarely exceed 500-1,000 words. However, applications generating comprehensive legal memoranda, detailed case analysis, or contract drafting may require iterative approaches breaking large outputs into sequential prompts. Strategic prompt engineering—requesting outlines first, then detailed sections individually—works around output limits while maintaining coherent results. When evaluating AI integration projects, matching model selection to required output length prevents architectural limitations discovered only after substantial development investment.
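The outline-first approach can be planned before any API call is made. As a rough sketch, assuming the words-per-token ratio implied by the figures above (about 3,000 words per 4,096 tokens) and an arbitrary 80% safety margin, the code below estimates how many sequential responses a long document needs and builds the prompt sequence. The prompt wording and function names are hypothetical, and no model is actually called.

```python
import math

WORDS_PER_TOKEN = 3000 / 4096  # ratio implied by the figures above


def prompts_needed(target_words: int, max_output_tokens: int,
                   safety_margin: float = 0.8) -> int:
    """Estimate how many sequential responses a document of target_words requires."""
    # safety_margin is an assumed buffer so responses stay under the hard cap.
    words_per_response = max_output_tokens * WORDS_PER_TOKEN * safety_margin
    return math.ceil(target_words / words_per_response)


def build_section_prompts(topic: str, section_titles: list[str]) -> list[str]:
    """Outline first, then one prompt per section, each sized to fit one response."""
    prompts = [f"Draft a numbered outline for a memorandum on: {topic}"]
    for number, title in enumerate(section_titles, start=1):
        prompts.append(
            f"Using the agreed outline, write section {number} ('{title}') "
            f"of the memorandum on: {topic}"
        )
    return prompts


print(prompts_needed(10_000, 4_096))  # 5 responses at a 4,096-token cap
print(prompts_needed(10_000, 8_192))  # 3 responses at an 8,192-token cap
```

In practice each section prompt would also carry the outline and a short summary of earlier sections so the pieces stay coherent when assembled.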

Ready to Dominate AI Platform Citations?

InterCore Technologies has pioneered Generative Engine Optimization for law firms since 2002, combining 23+ years of AI development experience with proven strategies for achieving visibility across ChatGPT, Claude, Gemini, Perplexity, and emerging platforms. Our developer-led approach implements the technical architecture and content frameworks that drive sustainable AI citation success.

📞 Phone: (213) 282-3001

✉️ Email: sales@intercore.net

📍 Address: 13428 Maxella Ave, Marina Del Rey, CA 90292

References

- IBM. (November 17, 2025). “What is a context window?” IBM Think Topics. Available at: https://www.ibm.com/think/topics/context-window (Accessed January 27, 2026)

- AIMultiple. (2025). “Best LLMs for Extended Context Windows in 2026.” AI Context Window Research. Available at: https://research.aimultiple.com/ai-context-window/ (Accessed January 27, 2026)

- WhatLLM. (January 2026). “Best Long Context LLMs January 2026: AI Models for Large Documents.” Available at: https://whatllm.org/blog/best-long-context-models-january-2026 (Accessed January 27, 2026)

- AllAboutAI.com. (November 6, 2025). “How does Context Window Size Affect Prompt Performance of LLMs? [Tested].” Available at: https://www.allaboutai.com/geo/context-window-size/ (Accessed January 27, 2026)

- IntuitionLabs. (December 3, 2025). “AI API Pricing Comparison (2025): Grok, Gemini, ChatGPT & Claude.” Available at: https://intuitionlabs.ai/articles/ai-api-pricing-comparison-grok-gemini-openai-claude (Accessed January 27, 2026)

- ADRA Tech Systems. (January 2026). “GEO: How to Rank on ChatGPT, Perplexity & Google AI | 2026 Guide.” Available at: https://adratechsystems.com/en/resources/geo-generative-engine-optimization-complete-guide (Accessed January 27, 2026)

- Siftly. (January 2026). “AEO Guide 2026: Rank on Perplexity, Claude & AI Search.” Available at: https://siftly.ai/blog/answer-engine-optimization-perplexity-claude-2026 (Accessed January 27, 2026)

- DreamHost. (December 5, 2025). “Get Your Website Cited by AI: ChatGPT, Claude, & Perplexity.” Available at: https://www.dreamhost.com/blog/ai-citations/ (Accessed January 27, 2026)

- Geneo. (2025). “How to Get Content Cited by ChatGPT & Perplexity: Agency Best Practices.” Available at: https://geneo.app/blog/ai-optimized-content-cited-chatgpt-perplexity-best-practices/ (Accessed January 27, 2026)

- GreenBananaSEO. (November 5, 2025). “How to Get Your Website Cited by ChatGPT, Gemini, Claude and Perplexity?” Available at: https://greenbananaseo.com/how-to-get-cited-in-chatgpt/ (Accessed January 27, 2026)

- TheMarketers. (November 28, 2025). “Boost AI Visibility: Rank on ChatGPT, Claude.” Available at: https://thesmarketers.com/blogs/boost-ai-visibility-chatgpt-claude-perplexity/ (Accessed January 27, 2026)

- ALM Corp. (December 25, 2025). “How to Rank on ChatGPT, Perplexity, and AI Search Engines: The Complete Guide to Generative Engine Optimization.” Available at: https://almcorp.com/blog/how-to-rank-on-chatgpt-perplexity-ai-search-engines-complete-guide-generative-engine-optimization/ (Accessed January 27, 2026)

- Profound. (August 2025). “AI Platform Citation Patterns: How ChatGPT, Google AI Overviews, and Perplexity Source Information.” Available at: https://www.tryprofound.com/blog/ai-platform-citation-patterns (Accessed January 27, 2026)

- WEZOM. (December 17, 2025). “AI Models Comparison 2026: Claude, ChatGPT or Gemini.” Available at: https://wezom.com/blog/chatgpt-vs-claude-vs-gemini-best-ai-model-in-2026 (Accessed January 27, 2026)

- DataStudios. (August 29, 2025). “AI: how large language models handle extended context windows (ChatGPT, Claude, Gemini…).” Available at: https://www.datastudios.org/post/ai-how-large-language-models-handle-extended-context-windows-chatgpt-claude-gemini (Accessed January 27, 2026)

- Sentisight. (January 2026). “2026 AI Subscription Prices: Gemini vs ChatGPT vs Claude.” Available at: https://www.sentisight.ai/ai-price-comparison-gemini-chatgpt-claude-grok/ (Accessed January 27, 2026)

- Google Cloud. (2026). “Vertex AI Pricing.” Google Cloud Documentation. Available at: https://cloud.google.com/vertex-ai/generative-ai/pricing (Accessed January 27, 2026)

Conclusion

The 2026 LLM landscape demonstrates remarkable technical diversity across context windows, architectural approaches, and optimization requirements. Google Gemini’s 2-million-token capacity enables comprehensive document analysis unmatched by competitors, while Claude’s consistent 200,000-token performance with minimal degradation provides reliability for precision tasks, and ChatGPT’s 128,000-token window combined with cross-session memory creates personalized experiences compensating for smaller context capacity. Understanding these technical capabilities enables strategic platform selection aligned with specific use cases rather than assuming larger context windows universally deliver superior results.

For law firms, AI platform visibility through citation optimization represents a fundamental shift in digital marketing strategy requiring balanced investment between traditional SEO and emerging GEO practices. The 76.1% overlap between Google top-10 rankings and AI Overview citations demonstrates synergy between optimization approaches, while the 90% of ChatGPT citations from sources outside top-20 rankings reveals opportunities for firms lacking dominant SEO positions. Technical infrastructure (page speed, schema markup, crawler access), content structure (answer capsules, self-contained units, question-based headings), and authority signals (fresh updates, detailed author bios, external citations) create the foundation upon which platform-specific optimizations build.

As AI adoption accelerates—with ChatGPT alone serving 800 million weekly users and Google AI Overviews appearing on 55%+ of searches—the competitive advantage accrues to firms implementing systematic GEO strategies now rather than reacting after competitors establish citation dominance. InterCore Technologies’ 23+ years of AI development experience positions us uniquely to guide law firms through this transition, implementing the technical architecture and content frameworks that drive sustainable visibility across the entire AI ecosystem. Explore our comprehensive legal marketing resources to understand how GEO integrates with broader digital marketing strategies for law firms in the AI era.

Author: Scott Wiseman, CEO & Founder

Published: January 27, 2026

Last Updated: January 27, 2026

Reading Time: 18 minutes